Author: Manikanta Kuna

Website: www.Manikantakuna.com

What Are Generative AI Architectures?

Generative AI architectures are the blueprints that define how AI models process data, learn patterns, and generate new content.

If the AI model is the “brain,” the architecture is the neural structure and information flow inside it.

These architectures evolved over time, from simple sequential models to the powerful transformers and diffusion models used today.

Evolution Timeline of Generative AI Models

| Generation | Architecture Type | Year | Strength | Status |
|---|---|---|---|---|
| 1️⃣ | RNNs, LSTMs, GRUs | 1980s–2010s | Sequential understanding | Largely replaced |
| 2️⃣ | VAEs | 2013+ | Latent space learning | Used in niche domains |
| 3️⃣ | GANs | 2014+ | Photorealistic images | Popular but decreasing |
| 4️⃣ | Autoregressive Models | 2000s+ (neural) | Next-step prediction | Core of LLMs |
| 5️⃣ | Diffusion Models | 2015+ | High-quality images/video | Rising rapidly |
| 6️⃣ | Transformers | 2017+ | Language + multimodal | Dominant in 2025 |
| 7️⃣ | Hybrid Models | 2020+ | Best of multiple models | Future direction |

RNNs / LSTMs / GRUs — Legacy Sequence Models

📌 Used for: Early chatbots, speech recognition, music generation

📌 Limitation: Forget long context; slow training, because tokens must be processed one at a time

Why they existed:

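Feedforward neural networks have no memory of earlier inputs, so they cannot use the words that came before.

RNNs introduced memory through a hidden state carried from step to step; LSTMs (and later GRUs) added gates that preserve longer-term context.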

💡 Example:

Predict the next word in: “The cat sat on the…”
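The “memory” that RNNs introduced is a hidden state updated once per token. Below is a minimal sketch of a vanilla RNN step, assuming hypothetical 2-dimensional word vectors and hand-picked toy weights (no training); it shows that an early input still shapes the final state, and that the loop is inherently sequential.

```python
import math

# Hypothetical toy weights for a 2-dim input and a 2-dim hidden state.
W_xh = [[0.5, -0.3], [0.8, 0.2]]   # input -> hidden
W_hh = [[0.1, 0.4], [-0.6, 0.3]]   # hidden -> hidden: the recurrent "memory" path
b_h = [0.0, 0.1]


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_step(x_t, h_prev):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev), b_h)]
    return [math.tanh(p) for p in pre]


def run_rnn(inputs):
    """Process a sequence strictly in order and return the final hidden state."""
    h = [0.0, 0.0]          # empty memory before the first token
    for x_t in inputs:      # step t cannot start until step t-1 has finished
        h = rnn_step(x_t, h)
    return h


# Hypothetical word vectors: the two sequences differ only in their FIRST input.
seq_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
seq_b = [[0.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(run_rnn(seq_a))
print(run_rnn(seq_b))  # different: the first input still shapes the final state
```

Because each step depends on the previous one, training cannot be parallelized across time steps, and repeatedly passing through `W_hh` and `tanh` tends to shrink the influence of early inputs over long sequences. That is the “forget long context” limitation in practice; LSTM and GRU gates were designed to soften it.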

🔚 Mostly replaced by Transformers.

Variational Autoencoders (VAEs) — Latent Space Creators

📌 Used for:

- Medical image anomaly detection

- Style variations

- Latent compression (used in Stable Diffusion)

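A VAE encodes an input into a distribution over a latent space (a mean and a variance), samples a latent vector from that distribution, and decodes it back into data. To generate new content, sample a latent vector from the prior and decode it.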
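The sampling step is what makes a VAE generative. This is a minimal sketch of two standard pieces, assuming a diagonal Gaussian latent: the reparameterization trick (so sampling stays differentiable) and the KL term that, added to a reconstruction loss, forms the training objective. The encoder and decoder networks are omitted; the `mu` and `log_var` values are hypothetical encoder outputs.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)) summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)

# Hypothetical encoder outputs for one input (a 2-dim latent space).
mu, log_var = [0.2, -0.1], [-1.0, -0.5]
z = reparameterize(mu, log_var, rng)      # "sample" step; z would go to the decoder
print(z, kl_to_standard_normal(mu, log_var))

# Generation: skip the encoder and sample z from the prior N(0, I) instead.
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
```

The KL term pulls every encoded distribution toward the same standard normal, which is what gives the smooth latent space used for interpolation.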

Strength:

✔ Smooth latent space for interpolation

Limitation:

❌ Outputs sometimes blurry

GANs — The Fake vs Real Battle

Two networks are trained against each other:

| Network | Role |
|---|---|
| Generator | Creates fake samples |
| Discriminator | Classifies samples as real or fake |
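The contest can be written as two losses. Below is a minimal sketch for a single real and a single generated sample, assuming the discriminator outputs a probability that its input is real; it uses binary cross-entropy for the discriminator and the “non-saturating” generator loss, one common choice among several GAN loss variants. The networks themselves are omitted.

```python
import math

EPS = 1e-12  # keeps log() away from log(0)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D.

    d_real: D's probability that a real sample is real (target 1).
    d_fake: D's probability that a generated sample is real (target 0).
    """
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))


def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D calls its fakes real."""
    return -math.log(d_fake + EPS)


# A discriminator that is winning: confident on both real and fake samples.
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))
# A discriminator that is fooled: it cannot tell real from fake (outputs 0.5).
print(discriminator_loss(0.5, 0.5), generator_loss(0.5))
```

In training, the two losses are minimized in alternation, and each network's progress changes the other's objective. That moving target is a large part of why GAN training is unstable.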

Famous Applications:

- DeepFakes

- StyleGAN faces

- Super-resolution

- Artbreeder

⚠️ Training is unstable, and mode collapse (the generator producing only a narrow range of outputs) is common

➡️ Diffusion models are gradually replacing GANs.

Autoregressive Models — Token-by-Token Generation

Predict the next element from all of the previous elements in the sequence, append it, and repeat.

Examples:

- GPT (language)

- PixelCNN (images)

- WaveNet (speech)

Core principle behind LLMs:

GPT = Autoregressive Transformer
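Autoregressive models factor a sequence's probability with the chain rule, p(x₁, …, x_T) = ∏ p(x_t | x₁, …, x_{t−1}), and generate by repeatedly choosing a next token and feeding it back in. This is a minimal sketch in which a hypothetical lookup table (`TOY_MODEL`) stands in for the neural network, using greedy decoding as the selection rule.

```python
import math

# Hypothetical toy "model": maps the FULL prefix to a next-token distribution.
# A real LLM computes these probabilities with a neural network instead.
TOY_MODEL = {
    ("the",): {"cat": 0.7, "dog": 0.3},
    ("the", "cat"): {"sat": 0.8, "ran": 0.2},
    ("the", "cat", "sat"): {"on": 0.9, ".": 0.1},
    ("the", "cat", "sat", "on"): {"the": 1.0},
    ("the", "cat", "sat", "on", "the"): {"mat": 0.6, "cat": 0.4},
    ("the", "cat", "sat", "on", "the", "mat"): {"<eos>": 1.0},
}


def generate_greedy(prefix, max_new_tokens=10):
    """Greedy autoregressive decoding; returns the tokens and their total log-probability."""
    tokens = list(prefix)
    log_prob = 0.0
    for _ in range(max_new_tokens):
        dist = TOY_MODEL.get(tuple(tokens))
        if dist is None:                        # toy model has no entry for this prefix
            break
        next_token = max(dist, key=dist.get)    # pick the most likely next token
        log_prob += math.log(dist[next_token])  # chain rule: log-probs add up
        if next_token == "<eos>":
            break
        tokens.append(next_token)               # the output becomes part of the next input
    return tokens, log_prob


tokens, log_prob = generate_greedy(["the"])
print(" ".join(tokens))     # the cat sat on the mat
print(math.exp(log_prob))   # probability of the generated continuation
```

The second “the” is followed by “mat” rather than “cat” only because the model conditions on the whole prefix; a GPT-style transformer gets the same effect by attending over all previous tokens. Production systems usually sample from the distribution (for example with temperature or top-p) instead of always taking the maximum.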

Diffusion Models — The New King of Images & Video

Process:

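1️⃣ Forward process: take an image and gradually add Gaussian noise over many steps

2️⃣ Training: the model learns to predict the added noise, i.e. to reverse the noising process

3️⃣ Generation: start from pure noise and denoise step by step into realistic content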
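Steps 1 and 2 can be made concrete. This is a minimal sketch, assuming a linear noise schedule with DDPM-style values (T = 1000, β from 1e-4 to 0.02) and the common noise-prediction formulation: step 1 has a closed form that jumps straight to any noise level, and step 2 trains a network to output the noise. The network and the step-by-step sampling loop of step 3 are omitted.

```python
import math
import random

# Linear noise schedule; T and the beta range are assumptions (DDPM-style values).
T = 1000
BETA_START, BETA_END = 1e-4, 0.02
betas = [BETA_START + (BETA_END - BETA_START) * t / (T - 1) for t in range(T)]

# alpha_bars[t] = product of (1 - beta) up to step t: how much signal survives.
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def add_noise(x0, t, eps):
    """Step 1 in closed form: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]


def estimate_x0(x_t, t, eps_hat):
    """Invert add_noise given a noise estimate eps_hat (the network's output in step 2)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(x_t, eps_hat)]


def noise_prediction_loss(eps_hat, eps):
    """Mean squared error between predicted and true noise (the training target)."""
    return sum((p - e) ** 2 for p, e in zip(eps_hat, eps)) / len(eps)


rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]                      # a hypothetical 3-pixel "image"
eps = [rng.gauss(0.0, 1.0) for _ in x0]
x_t = add_noise(x0, 500, eps)              # halfway through: mostly noise
print(x_t)
# A perfect noise predictor would recover the clean image exactly:
print(estimate_x0(x_t, 500, eps))
```

By the last step almost no signal is left, which is why generation can start from pure noise. Needing hundreds of such denoising steps per sample is also the source of the slower generation.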

Notable models and products:

- Stable Diffusion

- Midjourney

- DALL·E 3

- Runway video models

Strengths:

✔ Produces stunning visuals

✔ More stable to train than GANs

Limitation:

❌ Slower generation, since each sample needs many denoising steps

💡 Future AI-generated video will likely rely on diffusion + transformers.

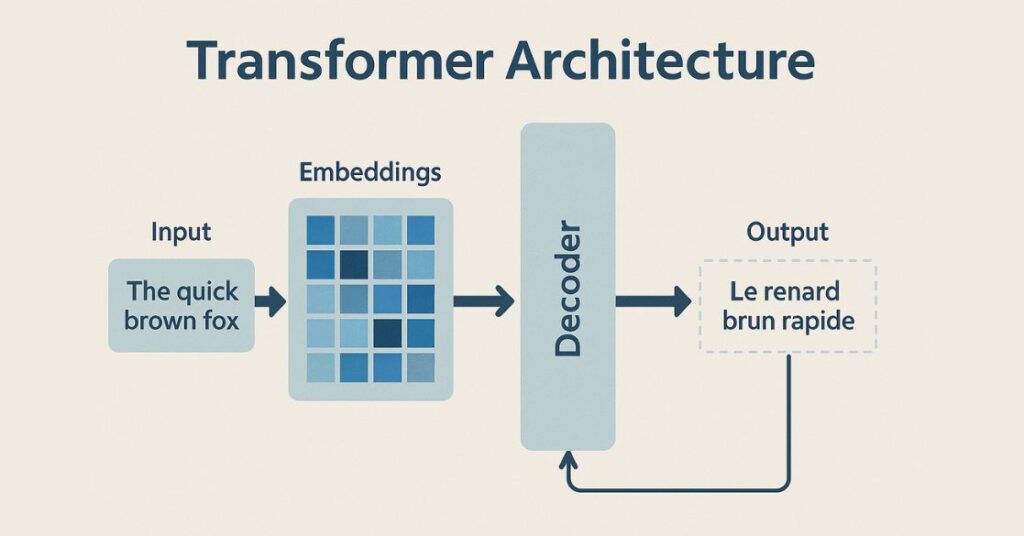

Transformers — The Brain Behind LLMs & Multimodal Models

📌 Introduced: 2017, “Attention Is All You Need” (Vaswani et al.)

📌 Core idea: Self-attention → every token weighs its relationship to every other token, computed for all tokens in parallel
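Self-attention reduces to scaled dot-product attention, softmax(QKᵀ / √d_k) V. This is a minimal pure-Python sketch with hypothetical 2-dimensional token vectors. For brevity it passes the same vectors in as queries, keys, and values, whereas a real transformer first applies learned linear projections and uses multiple heads.

```python
import math


def softmax(xs):
    m = max(xs)                              # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for lists of query, key, and value vectors."""
    d_k = len(K[0])
    weights = []
    for q in Q:                              # each query is independent -> parallelizable
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights.append(softmax(scores))      # how much this token attends to every token
    outputs = [
        [sum(w * v[j] for w, v in zip(row, V)) for j in range(len(V[0]))]
        for row in weights
    ]
    return outputs, weights


# Hypothetical 3-token sequence with 2-dim vectors. In a real transformer,
# Q, K and V come from learned linear projections of the same token embeddings.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(X, X, X)
for row in attn:
    print([round(w, 3) for w in row])
```

Since no query depends on another query's result, frameworks compute all rows at once as matrix multiplications, which is where the parallel-training advantage over RNNs comes from. The trade-off is that the score matrix grows quadratically with sequence length.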

Dominating Every Sector:

| Domain | Models |
|---|---|
| Language | GPT-4/5, Claude, Gemini, LLaMA |
| Code | GitHub Copilot |
| Vision | Vision Transformers (ViT) |
| Multimodal | GPT-4o, LLaVA |

Why they rule:

✔ Handle long context

✔ Scale to billions of parameters

✔ Parallel training across the whole sequence makes efficient use of GPUs, so larger models train faster

➡️ Today’s LLMs, agents, and most enterprise AI systems run on transformers.

Hybrid Models — Best of All Worlds

Architecture combinations such as:

| Hybrid | Used in |
|---|---|
| VAE + Diffusion + Transformers | Stable Diffusion |
| Transformers + Diffusion | Sora (OpenAI video) |
| Autoregressive + Diffusion | High-fidelity speech models |
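To show how the three parts of the first row fit together, here is a hypothetical data-flow sketch of a latent-diffusion pipeline. Every component is a placeholder with no learning, so only the order of operations is meaningful: a transformer text encoder turns the prompt into conditioning, diffusion runs in the smaller VAE latent space, and the VAE decoder maps the result back to pixels. `LATENT_FACTOR`, the toy embedding, and the toy denoiser are all assumptions. The VAE encoder, which is needed mainly for training and image-to-image, is omitted.

```python
import random

LATENT_FACTOR = 2  # hypothetical: the latent space has half the image resolution


def text_encoder(prompt, dim):
    """Stand-in for a transformer text encoder: a deterministic toy embedding."""
    rng = random.Random(prompt)
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def denoiser(latent, cond, step, num_steps):
    """Stand-in for the diffusion network: moves the latent toward the conditioning.

    A real denoiser is a trained network (U-Net or transformer) that predicts noise;
    this placeholder only mimics "less noise each step, guided by the prompt".
    """
    w = 1.0 / (num_steps - step)
    return [(1.0 - w) * z + w * c for z, c in zip(latent, cond)]


def vae_decode(latent):
    """Stand-in for a VAE decoder: upsample by repeating each latent value."""
    return [v for v in latent for _ in range(LATENT_FACTOR)]


def generate(prompt, image_size=8, num_steps=10, seed=0):
    latent_size = image_size // LATENT_FACTOR
    cond = text_encoder(prompt, latent_size)                    # transformer part
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(latent_size)]  # pure noise, in latent space
    for step in range(num_steps):                               # diffusion part
        latent = denoiser(latent, cond, step, num_steps)
    return vae_decode(latent)                                   # VAE part: back to pixel space


image = generate("a cat sitting on a mat")
print(len(image), [round(p, 3) for p in image])
```

Running diffusion on the compressed latent instead of full-resolution pixels is what makes this combination cheaper than pixel-space diffusion.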

Purpose:

- Control + Realism + Multimodal capability

➡️ Future generative AI will likely be built from hybrid systems

Summary Table

| Architecture | Best For | Still Popular? |
|---|---|---|
| RNN / LSTM | Legacy speech/text | ❌ |
| VAE | Latent manipulation, medical | ⚠️ Specific |
| GAN | Photorealistic images | ⚠️ Declining |
| Autoregressive | Language, audio | ✔ Core LLM logic |
| Diffusion | Image/video generation | 🔥 Leading in visual AI |
| Transformers | Language, code, vision, multimodal | ⭐ Dominant |
| Hybrids | Video, speech, multimodal agents | 🚀 Future |

Final Thought

Each generation solved a major bottleneck:

Sequence → Long-context → Visual realism → Multimodal intelligence → Autonomous Agents

➡️ Transformers + Diffusion are shaping the AI systems of the next decade.

➡️ Hybrid multimodal agents may outperform humans in many tasks.